Code Budgets

How much does a line of code cost?

The naive answer is that it costs nothing. Bytes are free -- or as close to free as we can come. But it has to cost something. After all, it took time to type in, right? So that takes us to our first rule of "Code Economics": the cost of a line of code is measured in how much time we spend working with it.

How much value is in a line of code?

The naive answer is that the economic value of a line of code is the value of the application (which can be measured in various ways) divided by its total lines of code (LOC). If you're making $10K per month from a thousand lines of code, each line is bringing in $10.

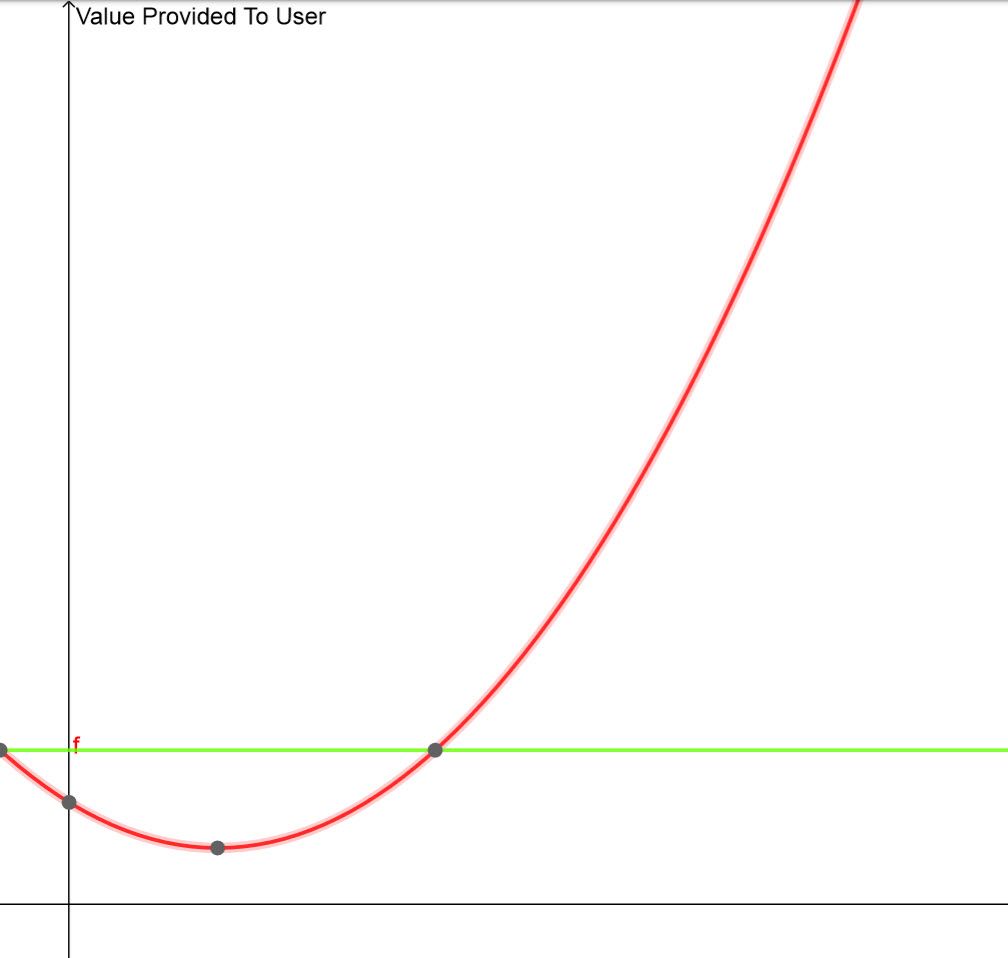

But that's obviously not true. We know that the same application can be written in a thousand, ten thousand, or a hundred thousand LOC. The thing the application does for people -- its value -- and the amount of code it takes to write it? They're not related at all. That takes us to the second rule of "Code Economics": a line of code has no value whatsoever. It only has value as part of some deployable application. The application is the smallest unit of economic value. (Strictly speaking, it's the UX flow, but for our purposes today, assume each application supports one UX flow that users find valuable.)

The value of an app is more directly measurable. Yes, sometimes it gets more complicated, like if the business purpose of the app is in-app purchases or referrals, but at some point money comes in and we can trace that money back to the app.

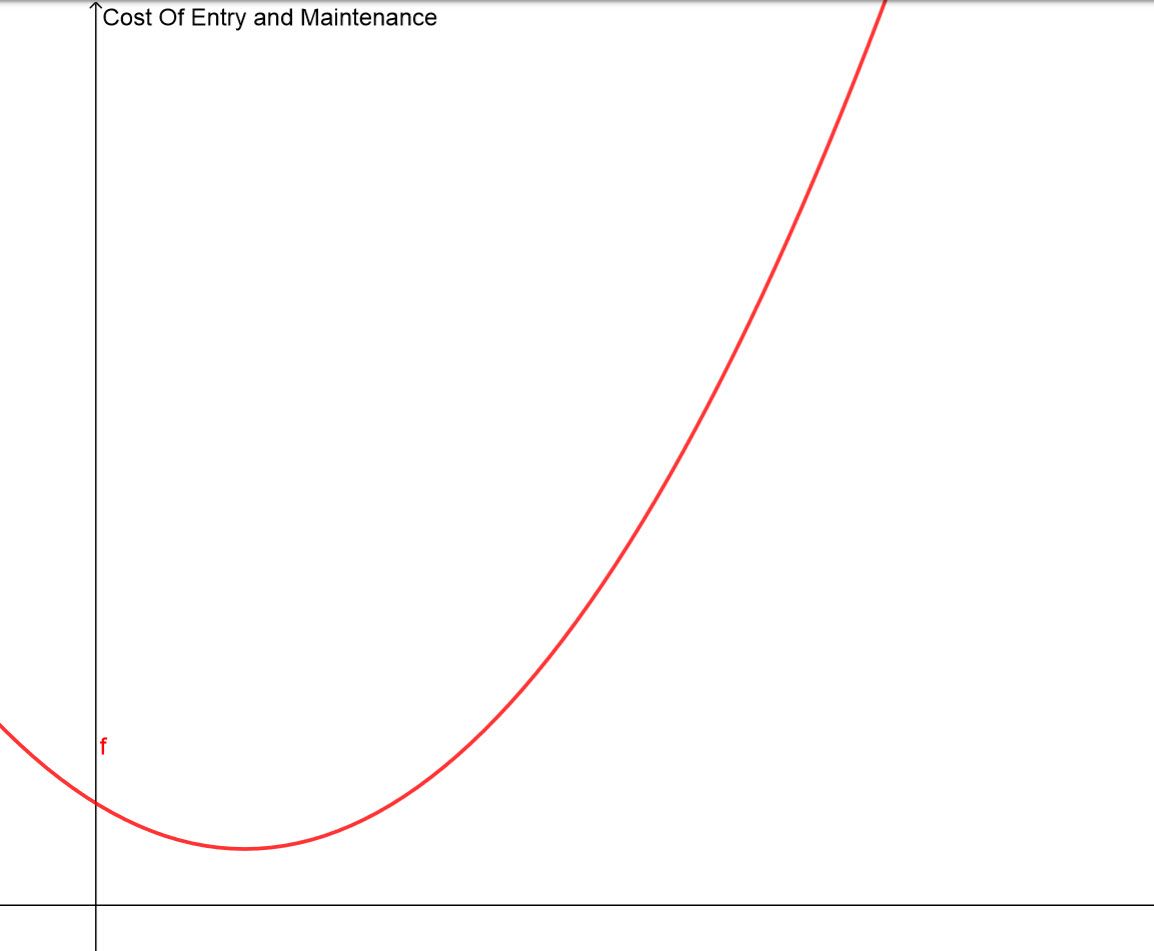

How about the cost of a line of code? Is it just the time we spend typing it in? That's obviously wrong. You have to design it, even if only in your head. You have to test it. You have to deploy it. In fact, once you start thinking about it, there's no limit to the amount of money you can spend on a line of code. If your app is successful, you might spend the rest of your lifetime maintaining that line of code -- looking at it every now and then, tweaking it, changing how it's used.

Of course, most lines of code either work fine or never provide enough value to make anybody bother with them again. (No economic value, no technical debt.) But, assuming you know enough to write an app that has value, each line of code in that app has a potentially infinite cost.

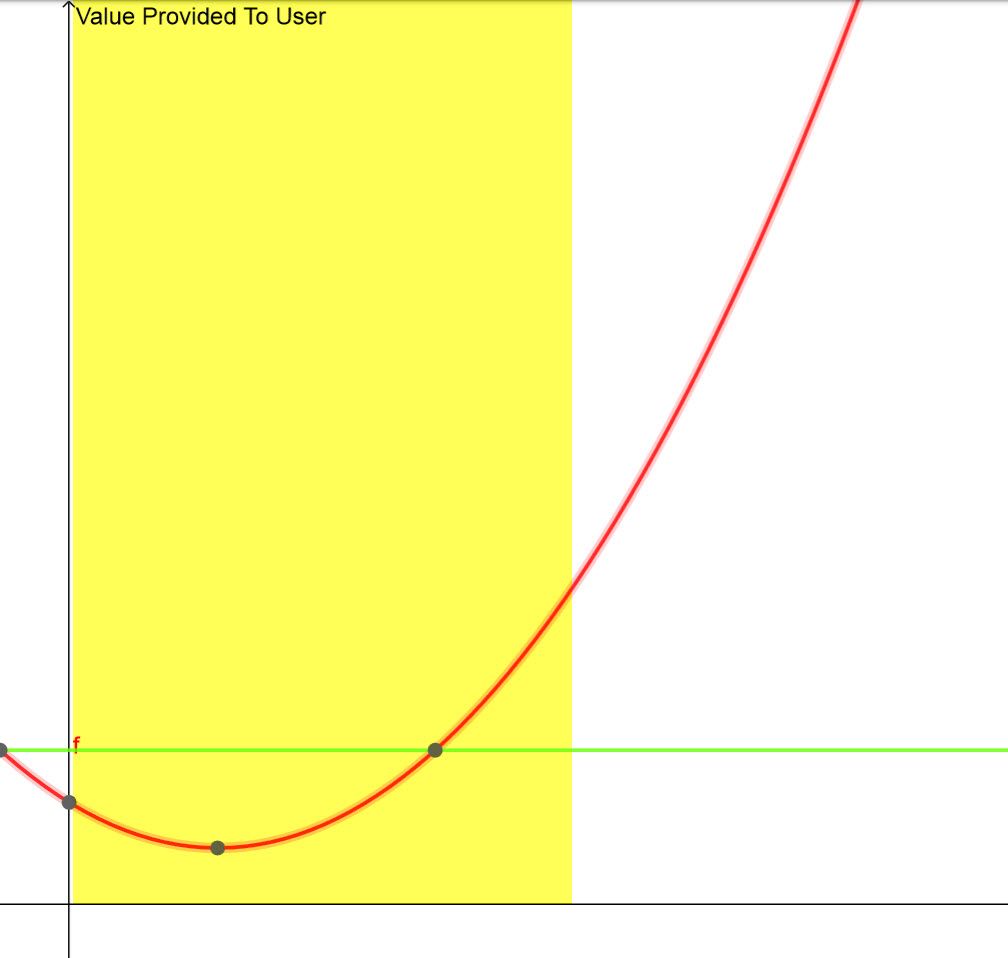

So value comes in app-sized chunks, while cost is tied to the tiny pieces we use to make that app, and how many of those pieces we use is, to a large degree, under our control.

We have a way in finance of dealing with things that have limited value but a potentially unlimited downside: we use a budget. I could go out next month and buy $500 worth of sugar-free bubblegum (I love the stuff), but I don't. I have a budget of $10 per month, and I stick to it. That's not because bubblegum is a huge drain on our finances. It's because little things add up. Without a budget, my wife and I have no reasonable way to discuss what's important to us. The budget itself isn't important. The conversation about values it drives is irreplaceable.

So why aren't we budgeting our code?

So far in our discussion, there's really no reason to pick one set of budget numbers over another. Should we limit our applications to a million lines of code? A hundred? We don't know. We can safely say two things. One, given the same value, more code equals more cost. Two, the amount of code always grows out of control the more we poke at it. This leads to the third rule of "Code Economics": the only way to limit the cost of solving a problem with programming is to arbitrarily limit the amount of code humans have to mentally manipulate to solve it.

In a way, this is all common sense. We've been doing it for years; we just haven't been explicit about it. Shared libraries, frameworks, industry standards, and a dozen other things all exist to limit the amount of code humans have to mentally manipulate to solve problems.

So why haven't we succeeded? Why can't most programmers today do their job using four or five lines of code?

Because the amount of code always grows the more we poke at it. When HTTP first appeared, if you wanted a web page? You brought up a terminal, telnetted to the server, and typed a small number of commands. Boom, there's your web page. Now there are easily 10, maybe 20, standards and libraries related to what used to be 10 seconds of work. Fifty years from now there will be 40 standards. Same value, more code: the value stays the same while the cost of the code keeps increasing.

I'd like to suggest a completely random rule that I just made up: arbitrarily limit the lines of code used to solve a problem, and write the app with the goal of never touching it again.

Furthermore, since I'm making stuff up, how about a 2000-200-20 rule for folks writing microservices? Whatever problem you have to solve using microservices, you have 20 microservices to solve it in. Each of those microservices can be written with no more than 200 lines of unique code.

But wait, I hear you say. That's never going to work! Okay, so across all of those microservices, you can also have 2,000 lines of code in a shared library. Anything you could ever touch counts as code.
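To make the made-up 2000-200-20 rule concrete, here is a minimal sketch of what a budget check might look like. It assumes the lines of unique code per microservice, and in the shared library, have already been counted somehow (what counts as a "line" is left open), and every name in it (`check_budget`, `MAX_SERVICE_LINES`, the service names) is hypothetical. It also spells out the arithmetic the rule implies: a hard ceiling of 20 × 200 + 2,000 = 6,000 lines for the whole system.

```python
from dataclasses import dataclass, field

# Hypothetical limits encoding the made-up 2000-200-20 rule.
MAX_SERVICES = 20          # at most 20 microservices per problem
MAX_SERVICE_LINES = 200    # at most 200 lines of unique code per microservice
MAX_SHARED_LINES = 2000    # at most 2,000 lines in the shared library

# Largest total the rule allows: 20 * 200 + 2,000 = 6,000 lines.
TOTAL_CAP = MAX_SERVICES * MAX_SERVICE_LINES + MAX_SHARED_LINES


@dataclass
class BudgetReport:
    total_lines: int
    violations: list[str] = field(default_factory=list)

    @property
    def within_budget(self) -> bool:
        # The system is within budget only if no limit was exceeded.
        return not self.violations


def check_budget(service_lines: dict[str, int], shared_lines: int) -> BudgetReport:
    """Compare pre-counted line totals against the 2000-200-20 limits."""
    violations = []
    if len(service_lines) > MAX_SERVICES:
        violations.append(f"{len(service_lines)} services exceeds limit of {MAX_SERVICES}")
    for name, lines in sorted(service_lines.items()):
        if lines > MAX_SERVICE_LINES:
            violations.append(f"{name}: {lines} lines exceeds limit of {MAX_SERVICE_LINES}")
    if shared_lines > MAX_SHARED_LINES:
        violations.append(
            f"shared library: {shared_lines} lines exceeds limit of {MAX_SHARED_LINES}"
        )
    total = sum(service_lines.values()) + shared_lines
    return BudgetReport(total_lines=total, violations=violations)


if __name__ == "__main__":
    # Hypothetical line counts, for illustration only.
    services = {"orders": 180, "billing": 150, "search": 500}
    report = check_budget(services, shared_lines=1200)
    print(f"total lines: {report.total_lines} (cap {TOTAL_CAP})")
    for violation in report.violations:
        print("over budget:", violation)
```

The point of a check like this isn't enforcement for its own sake. A failing line is simply the prompt for the budgeting conversation: which service gets its lines, and what gets cut to pay for them.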

I'm not going to defend my made-up rules aside from saying that budgets always seem arbitrary, and it's not right to create a budget so small that the work can't get done. I don't believe I've done that here -- but I might be wrong! Use other budgets if you like. Hell if I care. But use budgets.

Most programmers probably don't think such a budget is fair or workable. I think it's fine. That gap fascinates me, and it suggests a lack of alignment somewhere in the way we train and socialize programmers.

I'm also suggesting a new thing that has to happen: a code budgeting discussion. Yes, Daniel, your WhizzBang widget is freaking awesome, but it uses 500 lines of code, and that's 500 lines we can't afford right now.

Whoa horsey! Wouldn't I love to hear somebody actually say that! Cost control, in a programming team. Imagine that! And what would come next? I have no idea!

But I could guess.

Don't some problems require a lot of code? Don't some applications -- most, in fact -- require us to come back to them again and again in order to keep them in alignment with changing user needs?

Both statements are true. For complex things, we write components. Those components have executable tests that describe them, and they are used and shared in multiple places. The more they are used and shared, the better they get. That means a component is just another project. It also means that if you don't have multiple teams using your component, you don't have a component. Creating a component should be a decision made between teams, and it should involve a completely new project. After all, components have both cost and value.

What about coming back to tweak the app as the business needs change? Assuming you have a release that is currently demonstrating value -- a big assumption, by the way -- I think you have to timebox it. Three months of verifying that the app got things mostly right. After that? You write a new app, of course. With the budget we're talking about, it's not going to be a huge effort, you've already been living in this space, and it's good to rethink things. Code budgets and timelines prevent Second System Syndrome. (I think we went off the rails somewhere with Second System Syndrome, but I digress.)

There are parts of this essay that are completely made up. I tried to point those out. There are also parts that are built on decades of experience both coding solutions and watching teams work. What I've seen over and over again is that really cool things happen when smart people work under creative constraints. Really stupid stuff happens otherwise, because without constraints, it's all bikeshedding.

You need to start budgeting your code.
