Coding Constructs I Now Avoid

Perhaps if we all open-mindedly identify and talk about things we don't need we'll end up doing more of the things we do.

One of the best interview questions I ever heard during a tech interview came out of my own mouth. It was an accident.

After grilling a candidate on the subtleties of Java, the JVM, and all things Eclipse-related, I thought I'd be clever. "What, exactly, is a class, anyway?"

The candidate gave a pretty good answer. Without thinking, I followed up with "And why would you use one?"

I have been thinking about my question and the various answers I've heard ever since that day.

What I got from him was more versions of the things you could do with a class. There are a lot of fun and useful things you can do with classes, but my question was about "why". I can teach my baby chickens to swim, but why would I do so? The question isn't about their capabilities, but about why one capability would or would not be exercised over another. Programming languages and environments do lots of things. They do a lot more things than the average programmer will ever need, so the real question is about discrimination, taste, and reasons to do things, not the things themselves. How do we exclude things? Why do we choose to include them?

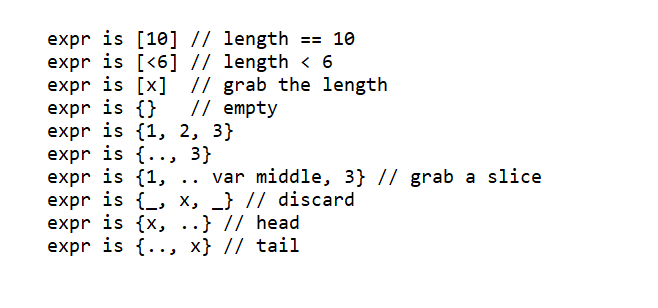

I thought about that again this weekend when I read that list patterns are heading to the upcoming version of C#.

Everybody wants to add stuff to their catalog. People only want the computer to do one thing or so, but enterprises (and coders) buy things based on huge lists of things they might need one day. Everybody's got a rocking how story. I'm just not hearing a lot of why, at least outside of the usual "We all do things exactly this way!"

The C# news came before another event this weekend: my friend Bob wrote an essay on if/else/switch. It included this gem from Bob, which caused the heads of several programmers worldwide to explode:

Place the if/else cases in a factory object that creates a polymorphic object for each variant. Create the factory in ‘main’ and pass it into your app. That will ensure that the if/else chain occurs only once.

As usual, the programmers were kind to Bob. We're an open-minded bunch. /s The basic feedback was along the lines of "who the hell would ever code like that?"

Bob did yeoman's work with the essay, and the entire drama boiled down to:

- Twitter sucks for anything more complicated than "rain is wet", and even then, I'd be careful

- Context is king. Bob was providing the super-duper, we-live-in-a-complex world answer in the tweet. The essay was much more nuanced

- You really don't want "bald" if/then/else/switch statements. The reason you'd use them is for some other thing, and thinking about that other thing is much more interesting and important than some kind of programming construct

Bob puts his reasoning like this:

...However, I have a hard time believing that the business rules of the system are not using that gender code for making policy decisions. My fear is that the if/else/switch chain that the author was asking about is replicated in many more places within the code...

That's a reasonable thing for an OO-guy to believe, and I'm with him: the reason we construct complex graphs of meaning is, well, to get some kind of meaning out of them. If you're switching on this thing in this spot, the safest thing to do is to assume you're going to be switching on it somewhere else too. That stuff needs to be abstracted away so you never have to think about it again.

This is the classic polymorphic argument: we don't need no stinking switch statements because whatever we might switch on is actually a business rule and business rules should always be an inviolable part of the construct itself, not something you'd hack away at in an oddball method somewhere.
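For readers who haven't seen the style Bob is describing, here is a minimal F# sketch of one way to read his advice. It is not Bob's code: the account codes, `IAccountPolicy`, `AccountPolicyFactory`, and the discount and greeting rules are all hypothetical stand-ins for his gender-code example. The if/else chain lives in a single factory, each branch returns an object implementing the same interface, and everything downstream calls methods on that object instead of re-checking the raw code.

```fsharp
// Hypothetical domain: an account code arrives as a raw string ("B" or "P"),
// and more than one business rule depends on it.
type IAccountPolicy =
    abstract Discount : decimal -> decimal
    abstract Greeting : string

// The factory is the one place the if/else chain lives.
type AccountPolicyFactory() =
    member _.Create(code: string) : IAccountPolicy =
        if code = "B" then
            { new IAccountPolicy with
                member _.Discount total = total * 0.90m
                member _.Greeting = "Dear partner" }
        elif code = "P" then
            { new IAccountPolicy with
                member _.Discount total = total
                member _.Greeting = "Hi there" }
        else
            invalidArg "code" (sprintf "Unknown account code: %s" code)

// Code elsewhere receives the factory and never branches on the code again.
let checkout (factory: AccountPolicyFactory) (code: string) (total: decimal) =
    let policy = factory.Create code
    sprintf "%s, you owe %M" policy.Greeting (policy.Discount total)

// "Create the factory in 'main' and pass it into your app."
let factory = AccountPolicyFactory()
printfn "%s" (checkout factory "B" 100m)
```

Adding a third kind of account means touching the factory and nothing else, which is the whole appeal of the approach.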

My problem with his essay is I just don't solve problems like that anymore.

```fsharp
// A parameterized partial active pattern: matches when n is evenly divisible by `by`.
// Returning Some () means the pattern can be used without binding a result.
let (|DivisibleBy|_|) by n = if n % by = 0 then Some () else None

let findMatch = function
    | DivisibleBy 3 & DivisibleBy 5 -> "FizzBuzz"
    | DivisibleBy 3 -> "Fizz"
    | DivisibleBy 5 -> "Buzz"
    | _ -> ""

// Print each number next to its match (Seq.iteri would print a 0-based index instead).
let fizzBuzz numbers =
    numbers |> Seq.iter (fun n -> printfn "%i %s" n (findMatch n))

[<EntryPoint>]
let main argv =
    fizzBuzz [1..100]
    0 // we're good. Return 0 to indicate success back to OS
```

Sticking everything into types hurts, so I don't do that anymore. I respect and understand those who do, and I think that, given the paradigm they're coding in, they're doing the right thing, but heading down that road always seems to end in heartache, complexity, and pain, so I stopped. Instead, given my druthers, I code in pure FP, using something I call Incremental Strong Typing. Sticking complexity into type systems is a great thing. It's so great you should only do it under certain circumstances. Assuming those circumstances always exist, much like assuming they never exist, never seems to work out well for practitioners over the long run.

Maybe if we talked about the things we avoid and the reasons why we avoid them, we could find common ground.

Here are things I try not to do and the reasons why. Of course, on occasion I do all of these things, and on those occasions I use various cool language features to make that happen. It's just as rare as I can make it.

Things I try not to do in code

- If/then/else/switch. Exhaustive pattern-matching guarantees that every code path is handled, and if I'm ever going to need those code paths, I define them as soon as possible and never worry about them again. This is Bob's polymorphic argument stated differently: if you have to think about it once, code it up and never think about it again. Where we part ways is that, for most work, I neither need nor care about that. Instead of assuming I might need it somewhere else, I only write chunks of code that have a specific business meaning in a single context. My scope can't be all of existence. I don't know what future the universe holds for me or this code. Trying to cover all of the bases once and forever looks like a fool's game.

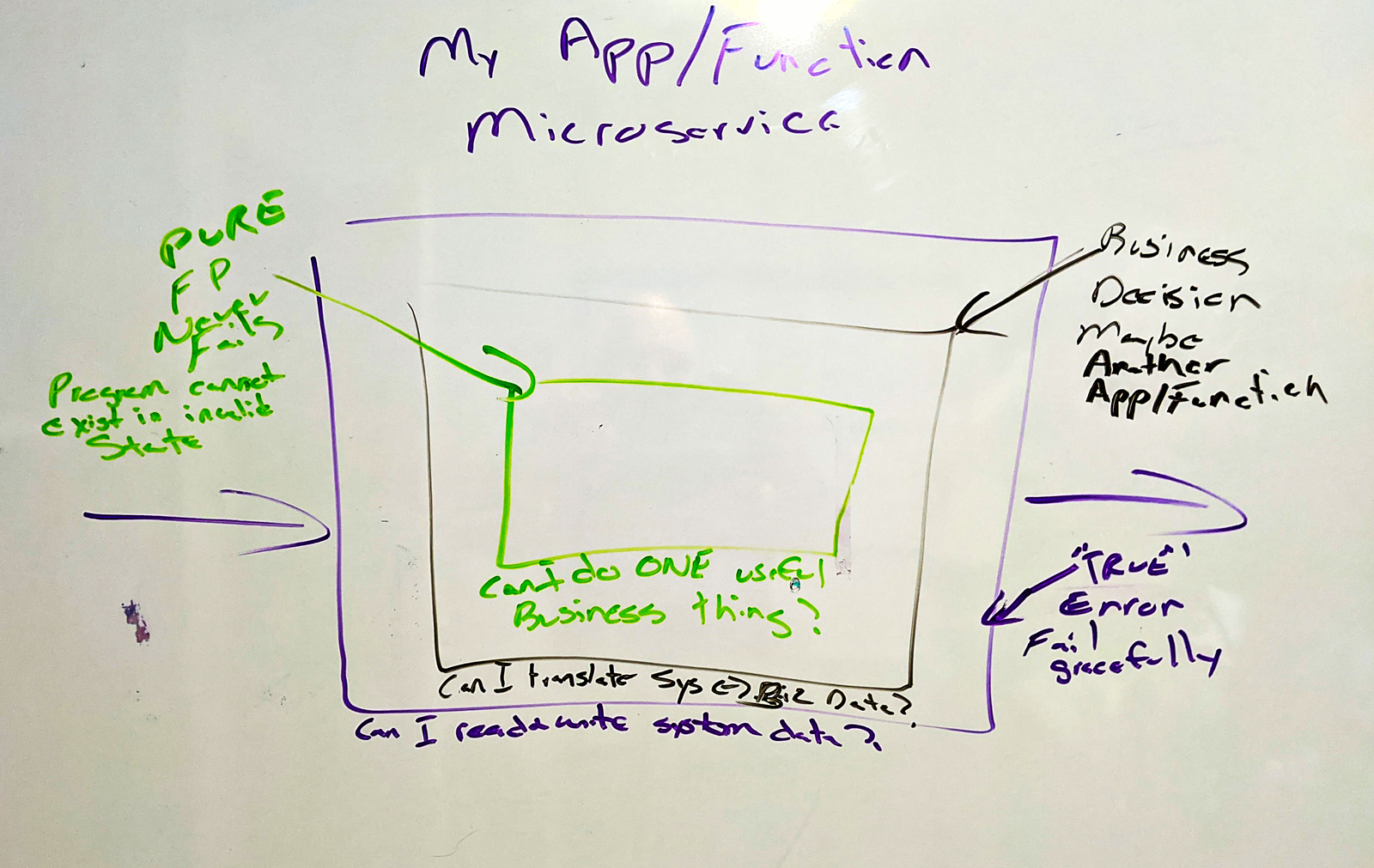
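As a rough illustration of what exhaustive pattern matching buys in F#, the sketch below uses a hypothetical `Shape` type. The cases are declared once, and the compiler checks every `match` against them: leave a case out and F# reports warning FS0025 (incomplete pattern matches), which a project can choose to treat as an error.

```fsharp
// Hypothetical example: every shape the code knows about is listed once, here.
type Shape =
    | Circle of radius: float
    | Rectangle of width: float * height: float
    | Triangle of baseLength: float * height: float

// This match must cover every case of Shape. If a new case is added to the
// type and not handled here, the compiler warns (FS0025) at this spot.
let area shape =
    match shape with
    | Circle r -> System.Math.PI * r * r
    | Rectangle (w, h) -> w * h
    | Triangle (b, h) -> 0.5 * b * h
```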

- try/catch/options. There are two and only two places I'll use a try/catch: at the outside level of a microservice to figure out what to do if the matrix is unkind and something bad happens, and when I have to coerce things from an I/O stream into a type to do something later. That's it. Wow, I love all of the various ways we've created to handle errors. We seem to be so good at creating errors that we're always making cool new stuff to handle them. Love me some monads. Looking forward to playing with algebraic effects. Typed exceptions are neat. But all of that looks to me like a long-winded way of not addressing problems when they actually occur, instead shipping them off to some other poor schmuck farther down in the code to figure things out. Far too many times I end up being my own schmuck. I don't do that anymore. Write error-free code by writing error-free code.

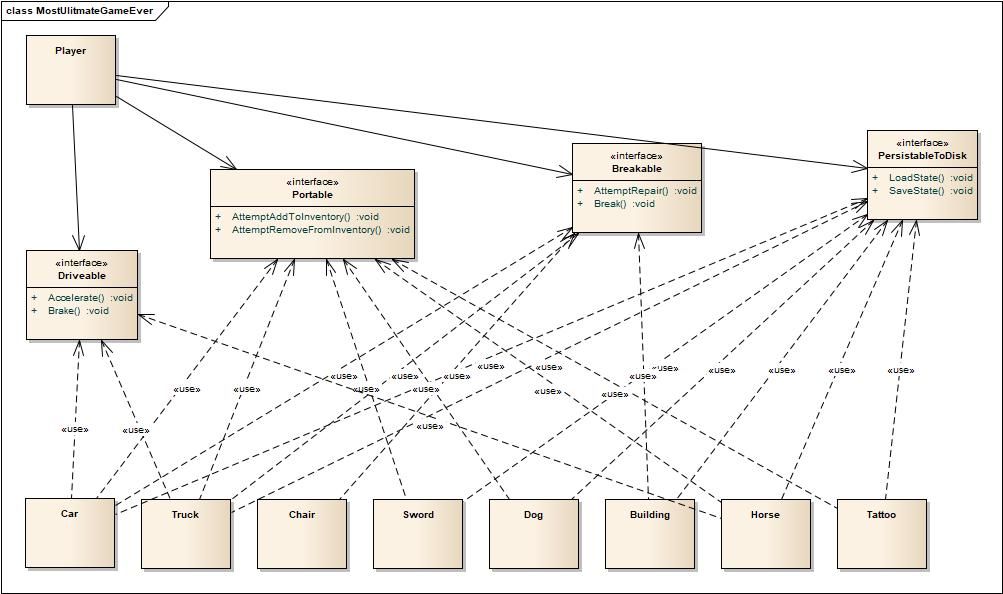
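Here is a minimal sketch of the second place a try/catch earns its keep, assuming a hypothetical comma-separated `sensor, value` input format and a hypothetical `Reading` record. The exception handling sits in the one function that coerces raw text into a type, and the code downstream only ever sees well-formed values.

```fsharp
open System
open System.Globalization

// Hypothetical record that the rest of the program works with.
type Reading = { Sensor: string; Value: float }

// Boundary: the one place raw text from an I/O stream is coerced into a type.
// A line that fails to parse is dealt with right here, not shipped onward.
let parseReading (line: string) : Reading option =
    try
        match line.Split(',') with
        | [| sensor; value |] ->
            let parsed = Double.Parse(value.Trim(), CultureInfo.InvariantCulture)
            Some { Sensor = sensor.Trim(); Value = parsed }
        | _ -> None
    with :? FormatException ->
        None

// Core logic: only ever sees well-formed Readings, so it needs no try/catch.
let average (readings: Reading list) =
    match readings with
    | [] -> 0.0
    | _ -> readings |> List.averageBy (fun r -> r.Value)

// Stand-in for lines read from a file or socket.
let lines = [ "a, 1.5"; "b, oops"; "c, 2.5"; "garbage" ]
let readings = lines |> List.choose parseReading
printfn "%d good readings, average %.2f" readings.Length (average readings)
```

`Double.TryParse` would avoid the exception entirely; `try`/`with` is used here only because it is the construct under discussion.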

- Inheritance. Damn I loved inheritance when I first saw it. A car is a type of vehicle, and I can make the code understand that! Woo hoo! Later on, for me this idea reached peak usefulness when working with GUIs and Operating Systems. A DialogBox is a type of Window. A Window is a type of MessagePump. Cool, now there's a bunch of things I don't have to worry about. In extremely tightly-confined contexts like an O/S where everything is created and maintained by a specific vendor, inheritance is a freaking miracle. The problem is that everybody thinks they're coding in a context like that when in fact nobody is. The entire concept is a mirage. A car is also a type of InventoryItem. It's also a type of ManufacturingArt. It's a type of InsuredItem. I can't think of all the shit any one thing might be a type of, and you can't either. Sure, we can hard-code something up and pretend that it's correct, but it's never correct over the long run, not even for those O/S guys. Like most everything on this list, it feels really good at first but tends not to age so well.

- Interfaces. Do it or don't do it, but don't promise to do it and then do it later, perhaps never. Certainly don't make the promise for me. The problem with interfaces is that they get out-of-hand so quickly. You want to define 4 methods that every class (of a certain kind) will do? Awesome! Who does that? Instead I'm always stuck with every class having to have a factory object and implement this list of twenty-seven things in three interfaces that some architect thought up while he was modeling the toughest part of our project. Ain't nobody got no time for that. This is the genesis of Bob's answer: in complex systems dealing with complex issues, you don't want to think more than once about important decisions, otherwise you'll inconsistently apply important rules in hard-to-find-and-understand ways. It's back to the one-ring-to-rule-them-all thinking that permeates so much of modern Object-Oriented coding. He's right. He's wrong. That hurts. Don't do that.

- For/Next loops. If you've got a collection of stuff in most any modern language, you've got methods to work on it, things like For/Each. Use them. More broadly, I should never care about the length of a collection. Empty collection? Okay, fine. Infinite collection? Works for me. You've got a bunch of stuff and I've got things to do with it. That's all I should care about, not the index, buffer size, and so on. Those things are important, and there are certainly places where I'd want an array with an index. Most of the time, however, I just want to chug through this big sack of things you gave me. (As far as I/O and buffer size go, those things are critical, just not here, down in the code where we're working. Do one thing at a time. See my try/catch item.) Every now and then, on extremely rare occasions, I'll need to get down in the weeds with a bag of stuff. Then I use recursion and pattern-matching, the way the Great Pumpkin intended. If I'm doing that I should be on yellow alert, though. I may be making things much more complex than they need to be.

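```fsharp
// Your language should be able to do most anything
// with a bag of stuff without needing a for/next.
// Signature: Seq.mapFoldBack mapping source state
let doubled, total =
    Seq.mapFoldBack (fun x acc -> x * 2, acc + x) [1; 2; 3] 0
// doubled = [|2; 4; 6|], total = 6
```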
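For the rare get-down-in-the-weeds case, a sketch of recursion plus pattern matching on an F# list might look like this. The task (run-length encoding) and the name `runLengths` are hypothetical examples. The list is taken apart by its shape, empty or head-and-tail, and never by index or length.

```fsharp
// Hypothetical task: collapse runs of repeated items,
// e.g. ['a'; 'a'; 'b'] -> [('a', 2); ('b', 1)].
let rec runLengths items =
    match items with
    | [] -> []
    | x :: rest ->
        // Encode the tail first, then either extend its first run or start a new one.
        match runLengths rest with
        | (y, n) :: tail when y = x -> (x, n + 1) :: tail
        | encoded -> (x, 1) :: encoded

printfn "%A" (runLengths ['a'; 'a'; 'b'; 'a'])
```

This version is not tail-recursive, so a very long list could overflow the stack; an accumulator-based version avoids that at the cost of a little more code.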

- Mutability. The first thing I learned as a programmer was something like "X=7", followed immediately by something like "X=8". See! It's a feature! "X" can be whatever you want it to be! This was one of the first things I learned, and it was one of the last things I finally unlearned. Yes, variables can mean anything, but why the hell would I want them to do that? Turns out this was a difference in focus. My teachers were trying to instill in me the idea of working at a meta level. You use X and then you reason about X in generic terms. But don't actually use "X" though! You gotta have a better name than that. After all, you use the variable name to reason about the code, so make it a good one! Later on, as I entered the vast pile of steaming fun that is the type wars, I realized that yay, X could be 7, X could be "dog", X could be anything I wanted. It could change value and type as I wanted. Did I mention the part about being sure you used good names?

I finally came full-circle and realized that it was more important to identify a small number of things that mattered and label them. If I let them change, the labels tended not to work so well. Limiting the number of symbols was much more useful to me as a coder than what kinds of symbols they were or what was currently stuck in them. Stick something in there, give it a name, and don't mess with it. If you've got too many things you're sticking names on, the code becomes difficult to reason about. Life becomes sad. Don't do that. See my Code Cognitive Load essay. If you'd rather watch and hear a rant about this, I also recently did an interview. I don't care what language or tools you use, but for the love of all things good in the world, learn to be nice to yourself and to future generations of programmers. We gotta stop making these freaking huge messes. I found I don't need mutability, so I don't do that.

- Creating libraries and frameworks. Good grief, there should be a support group for this. I am trying to quit. It is a struggle. I find that whatever I'm doing, I want to make it generic and re-use it. It's much easier to make things generic and code up lots of things I might one day need than it is to quickly solve problems in code and figure out if I'm actually doing something useful for the people who are paying me, so I do the fun things I like doing. Having watched myself and many other people in the technology sector work, I have come to realize that at a deep level, most of us, most of the time, should think more and work less. Extremely so. There are entire battalions of tech people who should stay home, take the day off, go fishing ... anything but do the things they think of when they think of the word "work". I am tempted to estimate the percentage of people in any large tech organization who are like that, but it's such a large percentage that it would be unbelievable to those who don't get it. It would sound preposterous, fantastical. The best I can do is try to fight this urge in myself. Simply because I can imagine something to be useful doesn't make it so. Maybe I need a mantra.
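To make the mutability item concrete, here is a small F# sketch contrasting the "X=7, X=8" style with binding a few well-named values once. The names and numbers (`basePrice`, the 8% tax factor, the `Order` record) are illustrative assumptions, not anything from a real codebase.

```fsharp
// The "X=7, X=8" style: F# allows it, but only if you ask for it explicitly.
let mutable x = 7
x <- 8   // from here on, "x" no longer means what it meant a line ago

// The alternative: a few well-named values, each bound once.
// Writing `basePrice <- 8m` would not compile; let-bound values are immutable by default.
let basePrice = 7m
let priceWithTax = basePrice * 1.08m   // a new meaning gets a new name

// Records follow the same rule: "changing" one produces a new value
// and leaves the original untouched.
type Order = { Item: string; Quantity: int }
let original = { Item = "widget"; Quantity = 1 }
let updated = { original with Quantity = 2 }
printfn "%A -> %A" original updated
```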

This is not meant as a dogmatic list. It's just stuff I avoid and why I avoid it. Instead of what your favorite language might do next year or the coolest way to handle the ultimate case of PROBLEM_X, what are things you avoid and why? Perhaps if we all open-mindedly identify and talk about things we don't need we'll end up doing more of the things we do.
