For The Love Of All That's Holy, Use CCL To Control Complexity In Your Systems

<rant>Two years ago I sat in on a security meeting. The subject was protecting the code and associated websites from attack.

"I'd just attack the npm packaging system, introducing subtle changes in several that would work together to do whatever I wanted."

All I got was blank stares, and this was from professionals. From this week's reading:

Unlike traditional typosquatting attacks that rely on social engineering tactics or the victim misspelling a package name, this particular supply chain attack is more sophisticated as it needed no action by the victim, who automatically received the malicious packages.

This is because the attack leveraged a unique design flaw of the open-source ecosystems called dependency confusion.

Even using things like Test-Driven Development, programmers can only reason about the small part of the code in front of them in the IDE. (In fact, one of the reasons TDD is so successful is that programmers have no idea how much they suck at actually understanding what they're doing.)

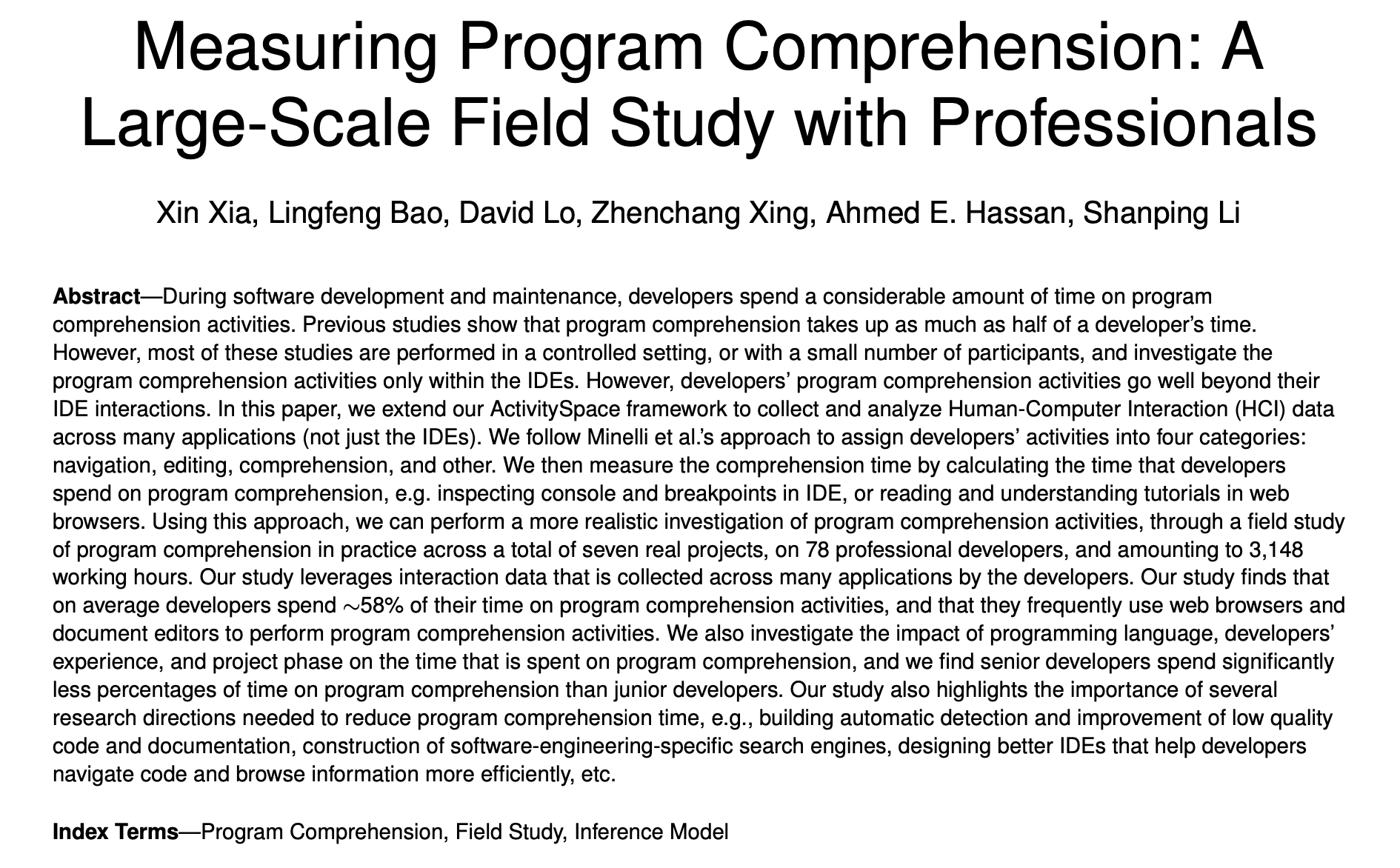

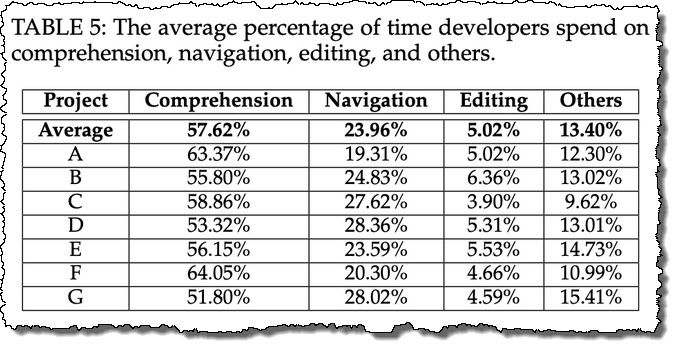

From a recent study:

Here's the takeaway graphic:

Note: this result describes the mean, that is, regular coders writing regular code. Your mileage may vary.

Why is this? Let's take two code samples:

echo Hello World

    #include <cstdio>

    int main()
    {
        printf("hello from %s!\n", "HelloWorld");
        return 0;
    }

In the first example, how many symbols is the programmer manipulating to reach their goal? Only one, "echo". In the second example, how many symbols are being manipulated?

I counted seven, but I wasn't trying to be strict.

What do these seven involve? There's namespaces, libraries, standard function signatures, string substitution, OS return values, and so on.

Do any of these matter to the new C++ programmer? Of course not! That's why it's called "Hello World". You're supposed to be able to type in a few symbols and start doing something useful right away.

The waters get murky very quickly after that. Let's say your boss sends you an email:

"Jenkins, add the cover sheet to all of those TPS reports"

Something goes wrong. The boss is unhappy. You two have a conversation about what you did that was mistaken.

Suppose instead you're looking at the following code:

WorkingStack.Reports.AddSheet(cover);

The boss is mad because it's not working right.

You can certainly go into the boss's office and have that same conversation, just this time over code instead of an email. In fact, that's what you have to do. Without a bunch more source code, what the hell does that C# code do, anyway? You don't know. It says it does the same thing as the boss wanted, but for all you know it's mailing off your tax returns to Russian hackers.

Most of modern programming is spent creating and consuming fake abstractions that are much more leaky and buggy than we'd like to admit. In fact, it's grown far, far beyond our ability to reason about. It's magic. We spend a lot of time pretending that this isn't the case, then cursing when reality rears its ugly head again.

When I was writing my second book, I had to address this problem because reasoning about code and controlling complexity is the number one problem in software development today. It's the reason we have buildings full of hundreds of developers wasting a lot of time and money. Our incentives are wrong.

The best thing to look for in any professional (doctor, lawyer, coder, etc.) is their ability to not engage with a problem, instead solving it in a simple and non-intrusive way. The worst professionals are the folks who are going to do a lot of work no matter what. These guys look busy, and they’re deep in a bunch of technically wonky stuff that nobody understands, so naturally they look like they know what they’re doing and are doing a good job. The guy who shows up in flip-flops and after a five-minute conversation solves your problem? He’s just some smart aleck showman, probably a con man.

We don’t teach coders the one skill they need most of all: adding incremental complexity as needed. Nobody talks about choosing what to add and why. Nobody talks about ways to understand you’ve gone too far (except for a few folks like me; apologies for the shameless plug).

For some odd reason, discussions on frameworks and complexity always devolve into some version of “Dang kids!” vs. “Talentless luddite!” As many people have pointed out, not only do we not teach or talk about incremental complexity, if you don’t have the appropriate buzzwords in your CV, you don’t get hired. So BigCorps naturally end up with scads of people who did well on scads of tech that somebody decided they had to use/learn, but nobody very good at actually making things happen.

This can't continue, and I want to do my part in making it end. The answer I came up with is something I call Code Cognitive Load (CCL). It's the amount of risk you take on as a programmer looking at any piece of code to manipulate it, whether coding fresh or doing maintenance on existing code.

- It's scoped first by method/function and then by compilation unit. There's no other scoping (namespace, class, module, etc.)

- To compute it, you add up four things: symbols you are required to look at to do your work, exceptions that using those symbols may throw, any editable code underneath that you may have to read or write for those symbols to work, and exceptions that code may throw.

You can see for the first Hello World example, there's only one symbol, echo, and there's only one general type of exception, and that's related to system resources. For the second example, the count easily goes into the dozens.
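Here's a rough sketch of that tally in Python. The class name, the field names, and the idea of counting "editable code underneath" as whole units are my assumptions for illustration; the definition above only says to add the four things up. The numbers are the ones just given for the echo example: one symbol, one general kind of exception, nothing editable underneath.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodeCognitiveLoad:
    """Hypothetical container for the four CCL counts of one function."""
    symbols: int               # symbols you must look at to do the work
    symbol_exceptions: int     # exceptions that using those symbols may throw
    editable_code: int         # assumed unit: pieces of editable code underneath
    editable_exceptions: int   # exceptions that underlying code may throw

    def total(self) -> int:
        # CCL is the plain sum of the four counts.
        return (self.symbols + self.symbol_exceptions
                + self.editable_code + self.editable_exceptions)


# The shell one-liner: one symbol (echo), one general exception type
# (system resources), and no editable code underneath.
echo_hello = CodeCognitiveLoad(symbols=1, symbol_exceptions=1,
                               editable_code=0, editable_exceptions=0)
print(echo_hello.total())  # 2
```

Run the same tally on the second example and you start at seven symbols before counting a single exception, which is how it gets into the dozens so quickly.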

Why is this related to the security problem I led off with? Because if you have code you can't reason about, you don't just have a risk for bugs, it's a security risk too. The code doesn't care whether it's busy screwing up your beautiful solution or hacking your local network. You can't reason about it. Period. That's the problem. It's doing whatever it's coded to do.

Do npm packages count as "editable code underneath that I have to read or write for these symbols to work"? Sure, if you're downloading code and compiling it, you may have to edit it. It's a risk. If you're just hotlinking to a dll or something, that's different. If you're using precompiled code, whatever you've got, you've got. It may be buggy as hell and it may do nasty things that make you sad and drink by yourself late at night, but it's not a complexity issue, it's some other kind of issue, security, performance, and so on. Don't confuse them. You address and work with the problems of that precompiled library in a totally different way than you would with code you may one day have to plow through.
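To make that split concrete, here's a minimal sketch. Everything in it is hypothetical: the `Dependency` type, the `built_from_source` flag, and both package names are invented for illustration. It assumes the only question that matters for CCL is whether you build the dependency from source you might one day have to edit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dependency:
    """Hypothetical record for one thing your code depends on."""
    name: str
    built_from_source: bool  # True if you download the source and build it yourself


def counts_toward_ccl(dep: Dependency) -> bool:
    # Source you build is code you may have to read or edit, so it is a
    # complexity risk. Precompiled binaries are some other kind of risk.
    return dep.built_from_source


# Made-up dependency list, for illustration only.
deps = [
    Dependency("some-npm-package", built_from_source=True),
    Dependency("vendor-library.dll", built_from_source=False),
]
print([d.name for d in deps if counts_toward_ccl(d)])  # ['some-npm-package']
```

The precompiled library doesn't vanish from your list of worries. It just goes on a different list, the one for security and performance.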

Code Cognitive Load (CCL) is not a good/bad metric. It's a measure of the risk in complexity you're assuming to do whatever work you have to do. Some problems may naturally require a great deal of complexity risk. Others not so much. That's your call, not mine.

The point is that you're measuring it, instead of just ignoring it until something goes wrong. Is your solution doing mostly the same thing but your CCL is going through the roof? You're most likely doing something wrong, since your code's value is staying the same while your coding risk is dramatically increasing.
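One way to watch for that is to record CCL next to some measure of what the code does, revision by revision. The sketch below is an assumption-heavy illustration: the function name, the use of a feature count as a stand-in for "value", the 1.5x threshold, and the history numbers are all invented, not measurements.

```python
def flag_ccl_growth(history, max_ratio=1.5):
    """Return labels of revisions whose CCL grew by more than max_ratio
    over the previous revision while the feature count did not grow.

    history: list of (label, ccl_total, feature_count) tuples, oldest first.
    """
    flagged = []
    for previous, current in zip(history, history[1:]):
        _, prev_ccl, prev_features = previous
        label, ccl, features = current
        same_value = features <= prev_features
        if same_value and prev_ccl > 0 and ccl / prev_ccl > max_ratio:
            flagged.append(label)
    return flagged


# Made-up numbers, for illustration only.
history = [("v1", 10, 3), ("v2", 12, 3), ("v3", 40, 3), ("v4", 44, 5)]
print(flag_ccl_growth(history))  # ['v3']
```

Here v3 more than triples the CCL without adding a feature, so it gets flagged. v4 costs a little more but actually delivers something, so it doesn't.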

There's no way of knowing whether code is appropriately complex or not, but we can (and should) measure how much complexity risk we're taking on ourselves and our downstream maintenance workers.

This will change the way you code and the way you think about coding. Good. Looking at the software landscape today, things need changing.

</rant>
