The Overlords Finally Showed Up

After decades of promises, AI has finally leveled up. What does that mean for us?

Back in the day, all the tech folks hung out online at a place called Slashdot (/. – CLI folks will get it).

Whenever some radically cool new tech showed up, invariably there would be a wag who would post "I for one welcome our new X overlords."

This quote began back in 1905 with H.G. Wells' short story "Empire of the Ants" and has taken on a life of its own, as shown in this Simpsons clip. The idea is that no matter how bad the end of civilization is, you can always switch sides.

For the record, I do not fear that any sort of Artificial Intelligence will hurt us or fight us to take over the world. I have never feared that. Instead, I fear that it will do exactly what we want it to do. This is a much worse situation.

I've been creating content for folks for a long time. One of the things this has taught me is how much all of us are on autopilot throughout the day. There's nothing wrong with that. In fact, there's no way we can be thinking through stuff in detail all of the time. So the brain hacks us into just deep diving enough every now and then to not look like a moron.

We deep dive much less than we think we do. I think programmers are more aware of that than others since we have to do a lot of it.

What this all condenses out to is that each person, and society as a whole, advances in knowledge and capability by chance interactions with others where both people are thinking deeply about a problem and each person has something useful to offer. Some folks call this a person's "luck surface". Companies have also been known to design offices to increase serendipitous encounters, or "collisions". You can't make this kind of innovation and advancement happen. It's not just a matter of being smarter or trying harder. Luck plays a huge role.

The Dark Ages were a time when humanity forgot how to read and write, when one person, a priest, was the sole person in your social life who could tell you truth from fiction. The Enlightenment changed all of that by re-teaching literacy. People became individually responsible for using reason and logic to try to improve their own lives. The church was quite afraid of this because of the chaos they believed would result. They were right: lots of people thinking for themselves is extremely chaotic and can even be unstable. But overall they were terribly wrong. It was only through the management of that chaos and instability that we have all we have today.

This new tech will take any issue and argue whatever side you'd like, producing paragraphs of reasonably-argued prose.

That effectively means that online communication is becoming something where none of us ever have to worry about happenstance encounters that change our understanding of the universe. Instead, whatever we need to believe, a computer can argue for us. If I take position A on something and you take position B, it's possible that we can both believe the other person is conversing in good faith. There's a mutual exploration. It's very difficult to accomplish this same group exploration remotely. Since most strangers are never going to take the time to read a five-paragraph, well-argued rebuttal, and since a person can generate one without having to do anything at all, we've effectively lost our ability to publicly discuss matters of any importance. (As AI integrates into our neural net this will apply to private conversations as well.)

I think there's a future for folks who self-organize into interlocking circles of trust. But for 99% or so of people, many of whom are barely literate now, we are effectively taking away their ability to use reason and logic to make their lives happier. Want to go to a good restaurant? Google can tell you. By the way, what does "good restaurant" mean? You don't have to care as long as Google cares.

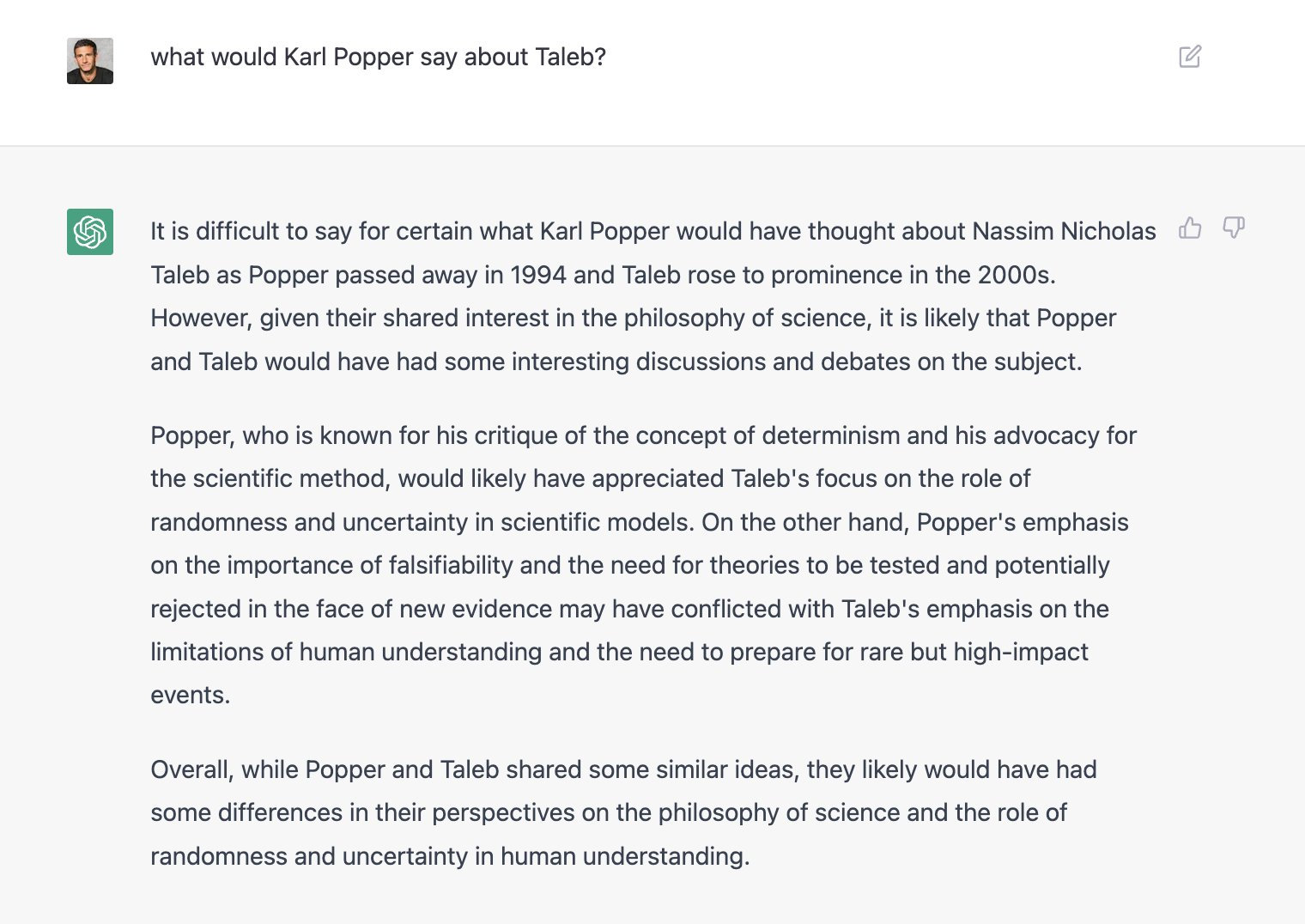

This was a cute example until I started seeing an AI that can argue Taleb vs. Popper, picking either side, or automated comments in reply to reading one of @paulg's essays.

It's like tech is making each one of us our own little village with a computer priest. Whatever we need to know, however we need to feel, that's the source for it. We are becoming illiterate in a world of literacy and miracles. We can still read words, but the words no longer have the ability to radically change our lives. Instead they are all carefully curated and crafted for us.

I was reading this comment on lobsters the other day about using a Chat AI to write code. Several times, and in different ways, the author warned the potential future user to "be careful!"

It is also extremely easy to gaslight yourself with it, because the human brain will pattern-match and anthropomorphize all the things.

I was nodding along, thinking "This is the problem! People are social animals that are made to work with other people, not plausible text!" Then I see this in my Twitter feed:

Hang on. If I'm shopping for pet carriers and I find this site, I'm looking for good stories about why I should get a certain kind of pet carrier, right? Somebody tell me how you found this product and why it was a good idea. From one perspective, they don't have to be true stories, right?

But then why have the site at all? Just tell me to buy something. If the stories aren't true, why am I spending all of my valuable time when I can immediately reach my goal? What is the purpose of all of this text if it is not to convince me that there is a real person out there with a narrative that I might one day engage with? It's beyond fraud. Fraud is when a person is purposefully tricked about one particular thing for money. This is next level. This is an entirely invented universe just for me. Even more perplexing, maybe I like that.

Sam Altman, a pretty smart guy, sees this dilemma and says we have a decision to make:

Interesting watching people start to debate whether powerful AI systems should behave in the way users want or their creators intend. the question of whose values we align these systems to will be one of the most important debates society ever has...

I think the sadly hilarious thing is that either way, we lose. There's not a correct choice here, or even one choice that's better than the other. To presuppose that would be to play God, to be able to make decisions about the entire planet, then be able to understand and enforce them. That's way outside my pay grade.

I think the worst part of this is how completely insane I probably sound to folks. "Spammers gotta spam" is a world I can live with. But as I was talking about on Discord the other day, systems evolve, and spammers are evolving into something we are not able to recognize as spam. We will continue being clever and spotting these kinds of things until the day we don't. At that point it'll all become invisible to us. We'll be literate, we may spend our day doing nothing but reading, yet we will be prevented by our technology from consuming meaningful text.

To be clear, I'm an optimist long-term, but short-term I see a lot of really bad things heading our way, sadly.

I, for one, do not welcome our new overlords.

P.S. One of the reasons this topic is so difficult to write about is that everybody already thinks they know what's going on. Humans are reductionist by nature. We do an extremely poor job of reasoning about millions of microscopic interactions happening per second all over the planet, yet this is the situation we are presented with. Sam Altman thinks we should choose between two paths. Commentator X thinks we should choose path Y in order to fix problem Z.

This is the way we think. No human alive can process multivariate massive simulations in order to make some sort of reasonable commentary about what's going on, not with AI but with the human-AI interactive experiment we've embarked on. You can't say AI is "good". You can't say it's "bad". When I speak of AI being an unmitigated existential threat to humanity, hell, that might be a good or bad thing. None of us knows. The simple fact that we think we know is the root of the problem that I worry about.

For what it's worth, the best path to analysis of this problem that I've come up with is to imagine an alien ecosystem. Instead of a climate with zones, populations, creatures, and such, we have people with cultures, languages, life experiences, and so forth interacting in real time with hundreds or thousands of apps with their own purposes and stories. As I pointed out 15 years ago, evolution is the only way I know to really look at the situation, and even then we're stuck trying to draw a circle around some area and then guess a selection criterion that might be at work. That's the best I got.
