The Platform Is The Enemy

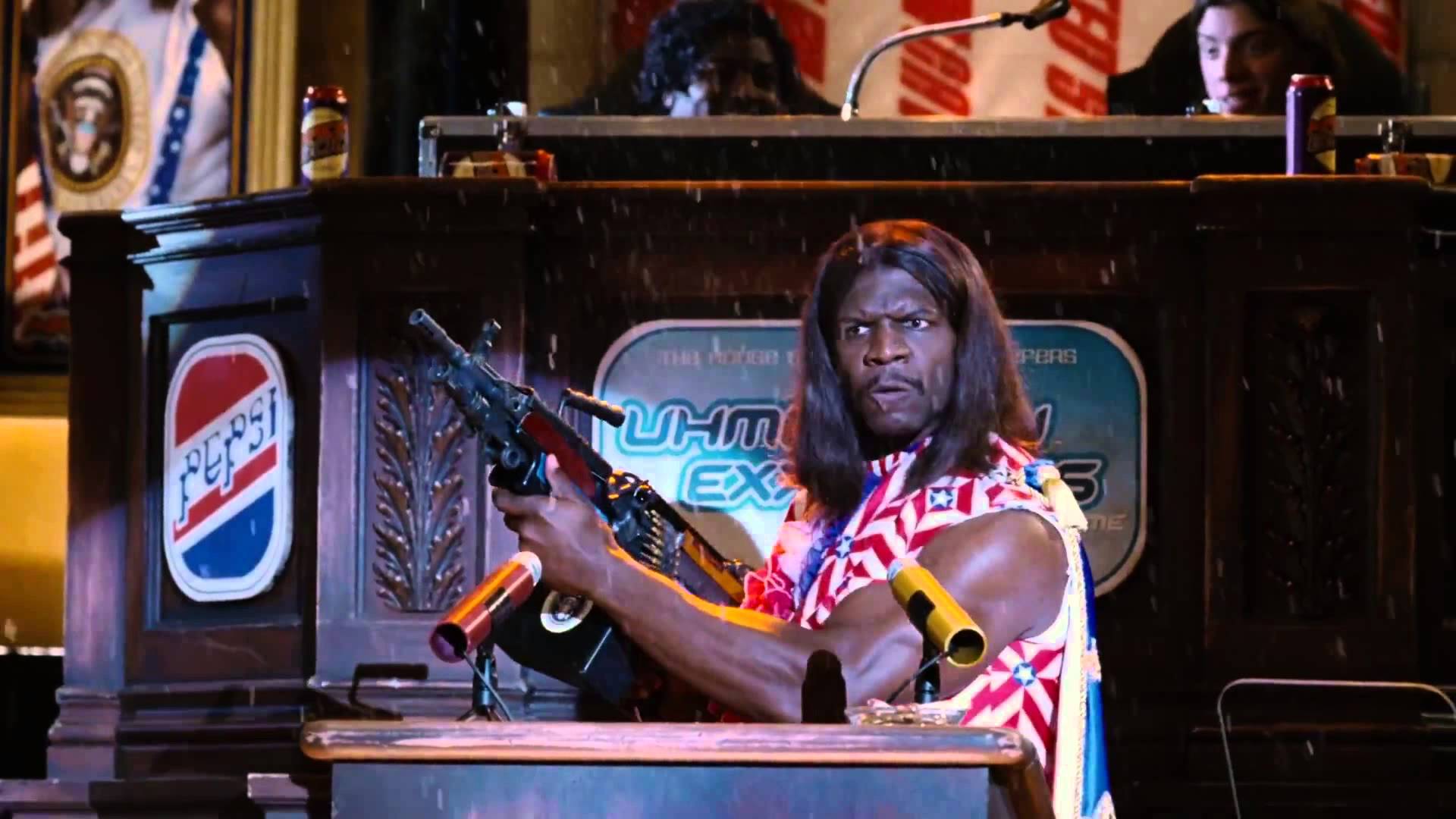

The premise of the movie "Idiocracy" is simple: in the future mankind has de-evolved into morons. Technology does so much for everybody that nobody knows how it all works anymore. If we can't fix it, we're all going to die.

One character asks another what he likes. The answer is money.

"I can't believe you like money too!" the first character says, without irony. "We should hang out!"

The gag, of course, is that almost everybody likes money. If you reduce your life enough, it's just food, sex, money, and looking cool. But who would want to do that? Over the centuries, humans have created massively complex societies because everybody has different things they like doing and thinking about, but all of that complexity can be reduced to, well, an idiocracy if you try hard enough.

The movie, however, is just a joke, right? We would never allow that to happen, of course, because that's not the goal of technology. Technology's goal is to make us better, not dumber.

Wait one. Is that true? What is the goal of technology, anyway? Has anybody ever clearly stated it?

Recently I've heard two goals:

- The goal of technology is to become a brain extension, helping you to decide what to do and then helping you get it done.

- The goal of technology is to become a hand-held power tool, helping you accomplish the things you've already decided to do.

Those aren't the same thing, and it turns out the difference is critical.

The old goal was much simpler: make something people want. I like that goal! It boils down the job of creating technology to the most important parts, need and ability. But was that sustainable? At the end of the day, don't we always end up making some combination of stuff that either helps us make decisions or helps us implement decisions we've already made? And aren't the two fundamentally incompatible in a future society?

Yelp tells you which restaurant to go to. Your GPS automatically takes you there. These are not just different problems; they're different kinds of problems. Getting from point A to point B is a matter of math and geometry. Which restaurant is the best tonight? You could spend hours debating that with friends.

If you reduce anything down enough it becomes idiotic. Each piece of technology we deploy can have the goal of helping us do what we've already decided or helping us decide what to do. The first option leaves the thinking up to us. The second option "helps" us think.

You like money too? Wow! I like money! We should hang out!

Human brains are not computers. Brains are designed to help us survive and pass on our genes using the minimum amount of energy possible. If the GPS takes me where I'm going, I don't need to know how to read maps anymore. So I stop knowing how to read maps. Dump those neurons; they're not needed. If Yelp picks restaurants for me often enough, I stop having nuanced preferences about restaurants. That energy expenditure is no longer needed for survival and reproduction. Dump those neurons. Over time people stop caring about the fine details that separate a good restaurant from a great one. Yelp handles that.

For some folks, who cares? It's food. Go eat it. For other folks, picking the right place can be a serious undertaking, worthy of heavy thought and consideration. But if over the years apps like Yelp boil all of that down to four or five stars, then our collective brain is not going to bother with it. Human brains are not computers. If computers do the work for us, we turn off those neurons and save energy.

Meanwhile, there's a huge discussion going on across social media. One bunch of folks says that social media is being overbearing in its censorship of fringe and sometimes hateful opinions. The other bunch of folks says social media is a festering sore full of people who are ugly, hateful, and abusive to the weakest among us, and that the community has to set standards.

There doesn't have to be a right and a wrong here. I think the crucial thing is to understand that both sides can be entirely correct. We are dealing with the same kind of question.

All three of these topics -- whether humanity is becoming idiots or not, what the ultimate goal of technology is or should be, and how social media should work -- are intricately related. They're related because of this: the platform is the enemy.

The minute we create a platform for something, whether it's rating movies, tracking projects, or chatting with friends about work, and that platform takes over mindshare, the assumption becomes that this is a solved problem.

The telephone was great. Once we had the telephone, people didn't have to worry about how to talk to people far away anymore. Just pick up the phone. Solved problem.

Facebook is great. Once we had Facebook, people didn't have to worry about how to interact with their friends in a social setting anymore. Just click on the little FB notification (which, for some reason, always seems to be flashing to get my attention). Solved problem?

But these are entirely different things! With the phone, I know who I want to call and why. I push buttons and we are connected. The tech helps me do what I've already decided to do. With Facebook, on the other hand, they get paid to show me things in a certain order. The premise is that I'm waiting (or "exploring" if you prefer) until I find something to interact with. The phone is a tool for me to use. I am the tool Facebook is using. I am no longer acting. I am reacting.

And even if they weren't paid, interacting with friends socially is an extremely complex affair. What kind of mood are they in? What's their life history? What things are bad to bring up? How does their body language look? Facebook's gimmick is "Hey, we've reduced all of this to bits and bytes, and we'll even show you what bits and bytes to look at next!"

Solved problem.

Many, many people do not use the internet; the internet uses them. And that share of people is constantly growing.

Just like the restaurant example, maybe that's fine. I have friends, I have opinions, who cares? It's all idle chat anyway.

That logic may hold for a bunch of things, but it can't hold for everything. Otherwise, 100 years from now, we'll be comparing our life values and end up saying something like "I like money too." Not everything can be reduced to the lowest common denominator. If it is, we all die.

Life is not a bit or a byte, a number to be optimized. It's meaning we define for ourselves, in ways we should not quantize.

Platforms, by their very nature, constantly send out the subtle message: This is a solved problem. No further effort on your part is required here. No thinking needed. Platforms resist change. They resist their own evolution by subtly poisoning the discussion before it even starts.

Are restaurant choices more or less important than which movie to watch tonight? There's no right or wrong answer to these questions. We have nice categories like restaurants and movies because currently people consider those things to be different kinds of choices. But why? If the algorithm is king, why shouldn't an algorithm determine both of those things for me? And if it does, why should I bother with worrying about which category is which?

Human brains are not computers. Let the platform decide. Energy not needed. Dump those neurons.

This is the more important point. It's not that the platforms turn what might be complex things into simple numbers, or even that they monetize attention. It's that by turning everything into numbers, over time they destroy the distinction between the categories entirely. Platforms are the enemy because they resist analysis in the areas they dominate.

Platforms turn things that should be open for debate into settled fact, like whether or not Taco Bell is a Mexican restaurant, or whether Milo is an artist with something useful to tell us. (I'm going with "no" on both.) More dangerously, they do the work of deciding what categories various things go into. This category over here is important. That category over there is not. We all make these decisions, and we all make them differently. The categories each of us pays careful attention to and loves obsessing about are all different, and because we all have different viewpoints and priorities, humankind advances in thousands of directions simultaneously. We survive. We evolve.

Twitter has to decide whether PERSON_X can speak or not because on the Twitter platform, that question has to have a yes or no answer based on the person. Twitter's category for deciding who can speak is "who is that?" Is that the right category for social conversations? For political conversations? For conversations about philosophy? Math? Who knows? Who cares? Twitter has decided. Solved problem.

Everybody has different things they like doing and thinking about. Different conversations and audiences have different criteria. Some problems should never be solved. Or, more directly, some problems should never have a universal answer.

An aside: We see the same thing in programming. One bunch of folks creates various platforms in order to do the thinking for another bunch of folks. Sometimes these platforms take off and become industry standards. That's quite rare, however. Most of the time we end up training morons who can weakly code against the platform but can't reason effectively about the underlying architecture, or about why the platform exists in the first place. In our desire to help, we harm the very people we're trying to assist -- by subtly giving them the impression that this is a solved problem. Programmers are just a decade or so ahead of the rest of us.

Popular platforms aren't just a danger economically because they control commerce. They're not just a danger politically because they selectively control and amplify political discourse. They're an extinction-level, existential danger to humans because they prevent people from seriously considering what kinds of categories are important in each of their lives. They resist their own analysis and over time make people dumber. Right now we're skating through the danger because we're harvesting people from less-advanced countries to do our hard-thinking for us. That window is quickly drawing to a close.

AI isn't a clear and present danger to our species because we're going to end up fighting the robots like in Terminator. AI is a clear and present danger to our species because it might end up doing exactly what some of us want it to do: become a brain extension.

If we can't fix this, we're all going to die.

The Idiocracy starts here. It starts now.
